Spare me your philosophy-loving preamble and get to the AI detection tools →

In 1950, the Turing Test was devised as a way of assessing whether a machine can “think.” In its original form, it described a situation in which an evaluator asks questions of two subjects, A and B. The evaluator knows one of the subjects is a machine and one is a human, but not which is which. If, based on their answers, the evaluator can’t determine whether A or B is the human, the machine is considered to have “passed the test.”

In 2023, it’s clear the Turing Test is way too easy. Not only can a machine easily fool us when we don’t know it’s an AI, but we can even know we’re talking to a machine and still find it terrifyingly sentient.

Maybe it’s easier to be human than we thought?

The Turing Test isn’t the only thought experiment around machine cognition, though. Searle’s Chinese Room Argument from the 1980s, for example, is one of the best-known arguments against the Turing Test definition of intelligence…

…which is all to say that the issue of how to distinguish man from machine was up for debate long before we got large language models capable of professing their love for a married reporter or learning Hemingway’s rules for writing. The question has only gotten more complicated since.

This is a B2B marketing blog though, so delivering a unified theory of sentience and intelligence is a tad out of scope for us. Instead, we’ll focus on the only two AI detection concerns you probably have right now:

- Can I publish AI-generated content without people (or Google) knowing?

- Is my freelance writer giving me AI-generated content without telling me?

We’ve already written about Google’s stance toward AI-generated content. (Key takeaway: You shouldn’t publish shitty content, regardless of whether you’re using humans or AI to create it. Shocking.)

When it comes to detecting AI-generated content yourself, though, you have options. Some are better than others. Let’s break it down.

Table of contents

- How AI detectors work

- The results

- The tools

Is there a way to detect AI-generated text? How?

To understand how most AI detection models work, you first need to understand how current AI models generate text. GPT-3 and others generate text by predicting what the next word should be based on what they’ve seen in their training data. Because of this, what they write is very…predictable. By definition.

What humans write, on the other hand, tends to be much less predictable. We reference things from our own experience, like the energy drink that’s getting me through a Thursday afternoon, or a relevant and humanizing anecdote from when I was a child obsessed with cheetahs and puzzles.

AI detection models use the predictability of AI-generated text to identify it as such. If an AI detection model were reading this article, for example, it would find the words “energy drink” in the last paragraph highly unpredictable, given that the rest of this article is about AI detection tools. Those two concepts don’t often go together. This and other instances of “random” words and phrases would suggest to the detection model that this article is too unpredictable to have been written by an AI.

If, on the other hand, the AI detection model scans something where every word is highly predictable, it will assume it was written by AI. Such an article would not be random enough to have been written by a human because we are by nature random, whimsical creatures.

Detection models can look at other features of text to identify AI content, too, and we’ll talk about some of those when we look at specific tools. The predictability or randomness of a text, though, is the primary one, so understanding how and why it is such a reliable predictor of AI-generated content is important.
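To make the predictability idea concrete, here’s a minimal Python sketch. It’s a toy, not how any tool on this list actually works: real detectors use large neural language models, while this one uses simple bigram counts with add-one smoothing. The function names `train_bigrams` and `average_surprisal` and the tiny corpus are made up for illustration.

```python
import math
from collections import Counter, defaultdict


def train_bigrams(corpus_tokens):
    """Count how often each word follows each other word (toy stand-in for a language model)."""
    follows = defaultdict(Counter)
    for prev, nxt in zip(corpus_tokens, corpus_tokens[1:]):
        follows[prev][nxt] += 1
    return follows, set(corpus_tokens)


def average_surprisal(tokens, follows, vocab):
    """Mean surprisal in bits per word; higher means less predictable text."""
    vocab_size = len(vocab) + 1  # +1 leaves room for words the model never saw
    total = 0.0
    for prev, nxt in zip(tokens, tokens[1:]):
        counts = follows.get(prev, Counter())
        seen = sum(counts.values())
        prob = (counts[nxt] + 1) / (seen + vocab_size)  # add-one smoothing
        total += -math.log2(prob)
    return total / max(len(tokens) - 1, 1)


# Hypothetical training text and two sentences to compare.
corpus = ("we are the leading content marketing platform "
          "and we are the leading content team").split()
follows, vocab = train_bigrams(corpus)

predictable = "we are the leading content marketing platform".split()
surprising = "we are the energy drink marketing platform".split()

print(average_surprisal(predictable, follows, vocab))
print(average_surprisal(surprising, follows, vocab))
```

The sentence with “energy drink” in it gets the higher surprisal score, which is the same signal a detector would read as “more likely human.”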

A final word on how AI detection works: AI detection models don’t work on very short copy. A sentence like “We are the leading content marketing platform” is predictable and could easily be written by AI — but it has also been written by countless humans. One predictable sentence in isolation doesn’t mean something was written by AI. The longer a piece of text goes without saying anything random, though, the more likely it is to have been written by AI.

For this reason, most tools explicitly state that content needs to be at least 50 words long for the detection model to be at all reliable. Even if you’re using a tool that doesn’t say that, though, you shouldn’t expect good results if your content is less than 50 words.

The results

Background: We’re a content creation platform, so we needed a way to know that the content our freelancers are submitting to our clients was actually written by a human. To that end, we’ve tested a lot of different AI detection tools. (Learn more about how we handle AI detection as a business.)

This table shows the results for six of the most popular AI detection tools on five different pieces of content:

How to interpret the percentages: For most AI detection tools, the percentage measures the likelihood that the content was created by AI, according to that particular tool. The first 9 percent result, for example, means that Originality.ai thinks there is a 9 percent probability that piece of content was created by AI. It does not mean that it thinks 9 percent of that piece of content was written by AI.

The exception to this is Writer, which does say their score refers to the percentage of content that was likely generated by AI.

We tested these six tools on five different pieces of content. Article #1 was a piece of pure human content. Article #2 was a piece of human-crafted AI content, which is our AI-assisted content offering where a human writer co-creates content with an AI model. Article #3 was copied and pasted directly from ChatGPT, with no human edits. Article #4 was a different article from ChatGPT based on the same prompt as article #3. Finally, Article #5 was a longer sample from ChatGPT.

We’ll walk through each of these tools in more detail and review what their scores mean in context.

Originality.ai

Originality.ai is the best AI detection tool we’ve tried so far, both in terms of its results and its usability at scale. For that reason, it’s the one we’ve implemented at Verblio to make sure customers who are paying for human-only content are getting just that.

Originality is super simple to use. First, copy and paste your text into their “Content Scan” tool.

Wait a few moments, and then…

Boom — you get a score indicating how confident Originality’s model is that your content was produced by AI. Remember: This percentage refers to a likelihood, not to a percentage of the content. If, for example, the score was 65% original and 35% AI, it would not mean that 35% of the content was written by AI; it would mean there is a 35% chance the article was written by AI.

It can also check your text against existing content on the web for plagiarism, and, in this case, it successfully recognized that this content was pulled directly from my existing Jasper review.

The tool has an API, which is how we’re using it at Verblio to check every piece of content our writers submit. You can also enter a URL to scan an entire site, without having to manually check every page.

Here’s how Originality did across our five samples:

You can see it did well with both the human and most of the pure AI content. It gave a low AI likelihood score (28%) to the first ChatGPT sample, which was apparently a tricky one for a few of these tools: Writer and Content at Scale struggled to identify it as AI-generated as well. This is a good reminder that these tools are still far from perfect, and you can get both false positives and false negatives. Overall, though, and across the thousands of articles we’ve currently run through the tool, Originality has performed very well.

Pricing: Originality.ai currently charges $0.01 per credit, and one credit will get you 100 words scanned. (If you scan for both AI and plagiarism, it will cost you twice the credits.) There are no platform fees or subscription needed.
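If you want to budget for this, the arithmetic is easy to sketch in Python. The price and words-per-credit values come from the pricing above; the function name `estimate_scan_cost` is made up, and rounding partial credits up to a whole credit is an assumption, not something Originality states.

```python
import math

PRICE_PER_CREDIT_USD = 0.01  # from the pricing above
WORDS_PER_CREDIT = 100       # from the pricing above


def estimate_scan_cost(word_count, include_plagiarism=False):
    """Estimated cost in USD for scanning one piece of content."""
    # Assumption: a partial credit is billed as a whole credit.
    credits = math.ceil(word_count / WORDS_PER_CREDIT)
    if include_plagiarism:
        credits *= 2  # AI + plagiarism scanning costs twice the credits
    return round(credits * PRICE_PER_CREDIT_USD, 2)


print(estimate_scan_cost(1000))                           # AI scan only
print(estimate_scan_cost(1250, include_plagiarism=True))  # AI + plagiarism
```

Under those assumptions, a 1,000-word article costs about ten cents for an AI-only scan.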

Nerdy stuff: Originality uses its own language model, based on Google’s BERT model, to classify text as either AI-generated or not. Their “how it works” write-up is not well explained at all. To be honest, that’s not a bad thing. It was clearly written by an engineer, and I’ll take a dense, jargon-filled description from the person who actually built the tech over vapid, hand-wavey copy from someone who doesn’t know a discriminator from a generator any day.

OpenAI’s AI Text Classifier

OpenAI’s tool is the newest one on this list (for now). It’s also the most soberly presented: The OpenAI team is very upfront about the current limitations of AI detection tools. To be clear, these are the limitations of any of the tools on this list; some of them just aren’t as honest about that.

OpenAI says this tool is specifically intended to “foster conversation about the distinction between human-written and AI-generated content.” They are very clear that it should not be used as the only indicator of whether something has been produced by AI or not, that it can misidentify content in both directions, and that it hasn’t been tested on content produced by some combination of AI and humans.

In keeping with its more measured approach, the classifier doesn’t provide a percentage score like most other AI detection tools currently do. Instead, it just says whether the text is “Very unlikely AI,” “Unlikely AI,” “Possibly AI,” “Likely AI,” or if it is “Unclear.”

Unsurprisingly, OpenAI’s tool did very well at detecting AI content across our five samples:

It’s reasonable to assume OpenAI will have one of the best AI detection tools available, as their model is the one responsible for most of the AI-generated content on the internet right now. Unfortunately, they don’t have an API for this tool yet, so Originality.ai is still the best option for our use case: running AI detection across every one of the hundreds of daily submissions on our platform.

We wanted to know exactly how Originality stacks up against OpenAI’s tool, though, so we ran 36 additional pieces of content through both detectors. We intentionally chose pieces with a range of Originality scores to see how they aligned on both ends of the spectrum. In order to compare the results, we coded OpenAI’s responses as percentages:

- Very Unlikely AI = 10%

- Unlikely AI = 30%

- Unclear if it is = 50%

- Possibly AI = 70%

- Likely AI = 90%
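The coding above is just a lookup table. Here it is as a small Python sketch, with a hypothetical helper `score_gap` for comparing one Originality score against the coded OpenAI verdict. The sample scores in the usage lines are invented for illustration, not results from our test batch.

```python
# The verdict-to-percentage coding listed above.
OPENAI_VERDICT_TO_PERCENT = {
    "Very unlikely AI": 10,
    "Unlikely AI": 30,
    "Unclear if it is": 50,
    "Possibly AI": 70,
    "Likely AI": 90,
}


def score_gap(originality_percent, openai_verdict):
    """Originality's score minus the coded OpenAI verdict (positive = Originality is higher)."""
    return originality_percent - OPENAI_VERDICT_TO_PERCENT[openai_verdict]


# Made-up example pairs: (Originality score, OpenAI verdict).
print(score_gap(95, "Likely AI"))
print(score_gap(85, "Unlikely AI"))
```

A large positive gap is the “Originality says AI, OpenAI disagrees” case; a large negative gap is the reverse.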

Here are the results from both tools. You can see Originality’s score in the left column, OpenAI’s original verdict in the middle column, and our coding of that OpenAI verdict as a percentage in the right column. Green cells are least likely to be AI, and red cells are most likely:

You can see the two tools were pretty well aligned in their scores. More importantly for our purposes, there weren’t any instances in this batch where Originality rated a “Likely AI” piece as less than 80 percent.

There are some mismatches in the opposite direction, where Originality gave a high AI likelihood to an article that OpenAI said was unlikely AI or unclear. We’d rather deal with a false positive and manually review the submission and writer, though, than risk missing AI-generated content.

This is an important reminder, though, that AI detection tools can and will return false positives. One high score on a single piece doesn’t necessarily mean a writer is using AI. That’s why we run every submission on our platform through Originality, so we can use larger trends across our writers and customers to detect AI content.
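Here’s one way “look at trends, not single scores” could be sketched in Python. To be clear, this is a hypothetical illustration and not a description of Verblio’s actual process: the function name `writers_to_review`, the 80 percent threshold, and the three-piece minimum are all assumptions picked for the example.

```python
from collections import defaultdict
from statistics import median


def writers_to_review(submissions, threshold=80, min_pieces=3):
    """Return writers whose median AI-likelihood score is at or above the threshold.

    submissions: iterable of (writer_id, ai_score_percent) pairs.
    threshold and min_pieces are illustrative values only.
    """
    scores_by_writer = defaultdict(list)
    for writer_id, score in submissions:
        scores_by_writer[writer_id].append(score)
    return sorted(
        writer
        for writer, scores in scores_by_writer.items()
        if len(scores) >= min_pieces and median(scores) >= threshold
    )


# Made-up scores: writer "a" has one outlier, writer "b" is consistently high.
sample = [("a", 95), ("a", 5), ("a", 10), ("b", 90), ("b", 85), ("b", 99), ("c", 100)]
print(writers_to_review(sample))
```

Using the median means one high score on a single piece doesn’t flag a writer, while a consistent pattern does. Writer “c” is skipped because one submission isn’t a trend.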

I expect OpenAI’s tool to eventually become the best in the industry. There are two reasons for this:

The first is simply that as I mentioned, it’s OpenAI’s model that is currently generating most of the AI text on the internet via GPT-3 and ChatGPT. Presumably they have a leg up in figuring out how to detect the output of their own models, including possibly watermarking their content in the future.

But second and more importantly, OpenAI has very high motivation to get this right. They’re going to continue building and training AI models, and they’ll continue to need enormous datasets of content to do so. They’ll be getting most of that content from the internet. If they don’t figure out how to detect AI content and remove that content from their training data, they’ll be training future models on AI-generated content…and then those models will create more content that is used to train still newer models…and so on in a downward-spiraling game of telephone that takes us farther and farther away from original human content. Yikes. Here’s hoping they figure it out.

Pricing: OpenAI’s classifier is free to use.

Huggingface

Huggingface is the name in open-source machine learning projects, with various models, datasets, and code freely available. It’s one of the best resources on the web right now, if you’re interested in taking a more hands-on approach to AI.

The thing is, this isn’t actually Huggingface’s model. You’ll see it referred to that way across the internet, but it’s actually another, earlier OpenAI detection model. It did, however, use Huggingface’s implementation of the RoBERTa model, which itself was developed by Facebook.

Regardless of the name behind it, though, this AI detection tool was trained on content from GPT-2. On the one hand, that means it’s less likely to catch content created by newer AI models. On the other hand, it means this team was working on AI detection before it was cool and isn’t just riding the ChatGPT tidal wave.

This tool analyzes your content in real-time, so you can see how the results change as you add more text. No matter how long your content is, though, it will only scan the first 510 tokens.
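In practice, a fixed window like that means everything past the cutoff is ignored. A rough Python sketch of the behavior, assuming whitespace-separated words as a stand-in for the model’s real subword tokens (which are usually shorter than words, so the true cutoff arrives sooner); `scanned_portion` is a made-up name:

```python
MAX_TOKENS = 510  # limit stated above


def scanned_portion(text, max_tokens=MAX_TOKENS):
    """Return the tokens a fixed-window detector would read, plus a summary line.

    Assumption: whitespace splitting stands in for the model's real tokenizer.
    """
    tokens = text.split()
    kept = tokens[:max_tokens]
    summary = f"based on the first {len(kept)} tokens among the total {len(tokens)}"
    return kept, summary


kept, summary = scanned_portion("word " * 982)
print(summary)
```

If the part of an article you’re suspicious about comes late in the piece, paste that section in on its own.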

In this case, it recognized that our latest experiment around editing Jasper content was in fact human-written with 99.98 percent certainty.

Despite not being trained on the latest generation of transformer models, though, Huggingface’s detection did really well across our five samples. Again, this isn’t surprising, given the expertise and experience of the teams behind it:

Pricing: Huggingface’s AI detection tool is free to use.

Copyleaks

Copyleaks has long been one of the best plagiarism detection tools on the market, and it’s the one we currently use at Verblio to make sure our customers are getting original, non-plagiarized content. They recently integrated AI detection into their product.

As with the other tools on this list, Copyleaks provides a web interface into which you can copy and paste your content to check it:

In addition to saying whether it thinks the text was created by a human or AI, Copyleaks also provides a probability score when you hover over the text. Again, that probability score is similar to Originality.ai’s score in that it refers to the likelihood the content was created by AI, not a percentage of the content that it thinks was created by AI.

Their web interface currently maxes out at 25,000 characters, which is plenty for most articles.

Across our five samples, Copyleaks did pretty well. It didn’t give a score above 90 percent on any of the ChatGPT samples, which is weaker than the last three tools we looked at. It was, however, at least directionally correct on all of them:

Pricing: Copyleaks’ web interface is free to use. Pricing for their API starts at $9.99 per month for 25,000 scanned words.

Writer

Writer, which offers AI-powered content creation specifically targeted towards B2B businesses, also has an AI detection tool.

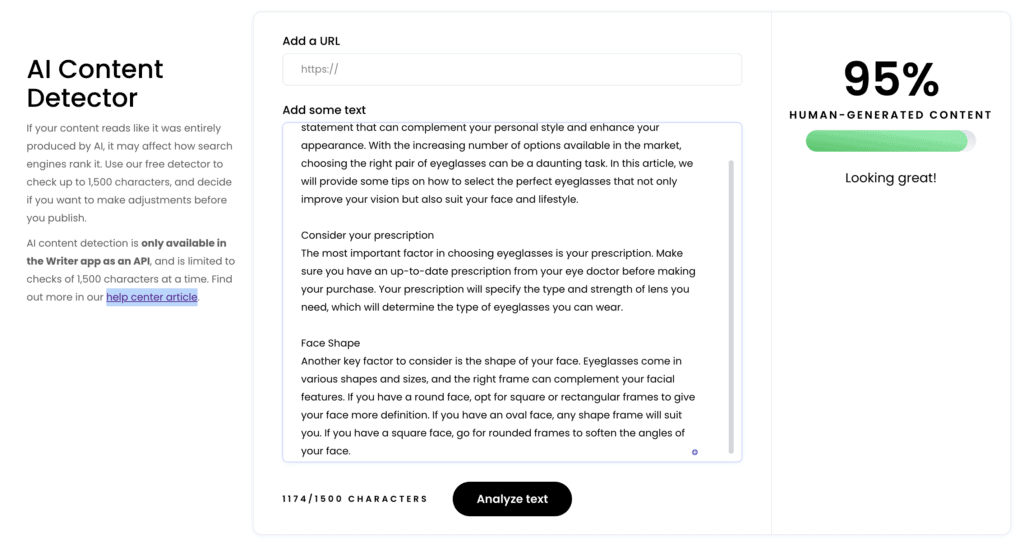

It’s more a leadgen play on their part than a robust AI detection tool. Even when you access it through their API, it will only scan 1,500 characters at a time. It was also released before ChatGPT and the GPT-3.5 update, so don’t expect it to work as well on the latest and greatest AI content.

Here are the results for an article I copied and pasted from ChatGPT on choosing eyeglasses:

That’s unfortunate. Interestingly, when I add another paragraph from ChatGPT to continue the article, the score changes significantly:

This is a good example of the fact that generally speaking, the longer the text, the more accurate the AI detection tool will be. (Though it does raise the question of why a tool would limit you to 1,500 characters.)
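If you’re stuck with a character limit, one workaround is to split the article into chunks and scan each one. A minimal Python sketch, where `split_for_limit` is a made-up helper and the 1,500-character default comes from the limit described above. Keep in mind that shorter chunks give the detector less to work with, so treat per-chunk scores with extra caution.

```python
def split_for_limit(text, max_chars=1500):
    """Split text into chunks of at most max_chars, breaking at whitespace."""
    chunks, current = [], ""
    for word in text.split():
        candidate = f"{current} {word}".strip()
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(current)
            # Assumption: single words are shorter than the limit;
            # anything longer is truncated rather than split.
            current = word[:max_chars]
    if current:
        chunks.append(current)
    return chunks


article = "word " * 1000  # stand-in for a 5,000-character article
chunks = split_for_limit(article)
print(len(chunks), [len(chunk) for chunk in chunks])
```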

Overall, Writer did the worst at detecting AI content of the five tools we’ve dug into so far.

Like Originality.ai, it struggled especially hard with the first ChatGPT sample. It also only gave an 83 percent to the other two, which most of the other tools had no trouble identifying as AI-generated.

Pricing: Writer’s web interface is available on their site for free. It’s not yet available within the app itself, but you do get 500k words included via their API if you’re an enterprise customer.

Content at Scale

I’m including this in the list only because I’ve heard multiple people mention it over the last week. It’s not just an AI detection tool — according to the homepage, it’s also supposedly a magical platform that creates optimized content that is “so human-like, that it bypasses AI content detection! This means you are protected against future Google updates. Content at Scale is the only solution on the market that has advanced enough AI to pull this off.”

I call BS. “This means you are protected against future Google updates” is a huge claim and not one that anybody with any SEO experience would make.

Also, though, it did the worst of all the AI detection tools by a long shot in our testing. It didn’t score any of our three pure ChatGPT samples above a 28 percent:

As a result, I have very little confidence in Content at Scale’s tech either to detect AI content, or to create it.

I’ve included two additional tools that are interesting in the field of AI detection, but that you probably wouldn’t use for your own marketing content: GLTR and GPTZero.

GLTR

The Giant Language model Test Room, or GLTR, is my favorite AI detection tool, even if it’s somewhat out-of-date in a post-ChatGPT world.

Like Huggingface’s tool, GLTR was built to detect GPT-2-generated content, so it’s less effective against newer models and tools, most of which are built atop GPT-3. What it lacks in accuracy and recency, however, it makes up for in pretty colors and fascinating insights.

Again, this isn’t the tool you’re going to use on a regular basis to figure out whether your content was generated by AI. It can, however, help you understand how these detection models work and what kind of writing (and word choices) are seen as more predictable.

Here are the GLTR results for an article I wrote explaining GPT-3:

Whew. There’s a lot going on here. Unlike the other tools on this list, GLTR doesn’t provide a clear-cut “This is how likely it is your content was produced by AI” score. Instead, it highlights words according to how predictable they were. Green means a word was highly predicted by the model, while red and purple mean a word was less predicted.

This provides a useful visual of your content: The most interesting words will appear in red and purple. In an AI-generated article, you’ll see almost entirely green words. You can also hover over a word to see what words were most predicted by the model.

In this case, it expected to see the word “you” with a 61 percent likelihood, followed by an 8 percent likelihood of seeing “a” and a 2 percent likelihood of seeing “the.” Instead, it saw the word “items,” so it highlighted it yellow for being less predictable.
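The highlighting logic boils down to bucketing each word by where it ranked in the model’s list of predictions. Here’s a Python sketch of that idea. The cutoffs of 10, 100, and 1,000 are an assumption about how GLTR’s buckets are set up, and `gltr_color` and `fraction_green` are made-up names; the ranks in the usage lines are invented.

```python
def gltr_color(rank):
    """Map a word's rank in the model's predictions (1 = most likely) to a highlight color."""
    if rank <= 10:
        return "green"
    if rank <= 100:
        return "yellow"
    if rank <= 1000:
        return "red"
    return "purple"


def fraction_green(ranks):
    """Share of words that landed in the most-predictable bucket."""
    return sum(1 for rank in ranks if gltr_color(rank) == "green") / len(ranks)


# Invented ranks for a short passage: mostly predictable, a few surprises.
ranks = [1, 3, 2, 37, 1, 5000, 8, 250]
print([gltr_color(rank) for rank in ranks])
print(fraction_green(ranks))
```

A passage where nearly every rank falls in the green bucket is the “almost entirely green” pattern described above.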

If you’re thinking “There are still a lot of green words in that first screenshot, looks like it was AI-generated,” it’s important to understand that most of what we write as humans is predictable, to a degree. The rules of grammar and common usage mean only a certain subset of words can follow any given word, and the context of preceding words reduces that pool even further. For example, in English, verbs follow subjects, and a preposition can’t be followed by a conjunction. A string of truly unpredictable words — “into because tragic green lowering hero oil says” — would be complete gibberish.

Kevin Indig did a great case study last fall showing how the results of human-generated content on Wikipedia compare to AI-generated text from a low-quality, spammy site. Check out how GLTR’s highlighting and histograms compare between the two pieces of content he tested to better understand this tool’s results.

Pricing: GLTR is freely available.

GPTZero

GPTZero was built immediately after the release of ChatGPT. It’s targeted at educators and fighting academic plagiarism, and it’s gotten a lot of press because people love it when college kids make stuff.

It’s definitely designed for essays, not marketing blog posts that have been optimized for readability on the web with bulleted lists, etc. We shouldn’t be too hard on it, then, if it doesn’t do as well for our purposes. Nevertheless, I do want to call out one particularly amusing oddity that occurred when I ran the first 5,000 characters of this post on the best AI writing platforms into the tool.

Here’s what it gave me:

The highlighted sentences are the ones GPTZero thinks were most likely written by AI. Those were the only two it highlighted from the first 50 sentences of the article (which is all it will show me on the free version).

The strange part: You’ll notice at the top of my article it says “HUMAN ARTICLE.” That’s because I ran a lot of different articles through detectors in my research for this article, and I keep them all as plain text in a doc with labels to keep them all straight. When I copied and pasted this article from that doc into GPTZero, I accidentally copied that label.

After I got the results back, I noticed that label was there and removed it. Then I ran it again, on what was otherwise the exact same text.

Seriously? The only reason GPTZero didn’t flag it as possibly AI-generated the first time was because it said “HUMAN ARTICLE” at the top?

But also, it thinks my 100 percent human article may have parts written by AI? I’d be offended, if I weren’t well aware that all AI detection tools are capable of both false positives and false negatives.

Nerdy stuff: GPTZero aims to detect AI text by analyzing its “perplexity” and “burstiness.” Perplexity just refers to the text’s randomness, which we already discussed. “Burstiness” refers to the variation in perplexity throughout the text. So, the two measurements are somewhat analogous to a function and its first derivative in calculus.
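For the curious, here’s a rough Python sketch of both measurements. Perplexity uses the standard formula (the exponential of the average negative log probability a model assigned to each token). GPTZero’s exact burstiness formula isn’t described here, so measuring it as the standard deviation of per-sentence perplexity is an assumption, and the probabilities in the usage lines are invented.

```python
import math
from statistics import pstdev


def perplexity(token_probs):
    """Perplexity of a sequence, given the probability a model assigned to each token."""
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


def burstiness(sentence_token_probs):
    """Assumed definition: spread (population std dev) of per-sentence perplexity."""
    return pstdev([perplexity(probs) for probs in sentence_token_probs])


# Invented per-token probabilities for two three-sentence texts.
steady = [[0.5, 0.5, 0.5], [0.5, 0.5, 0.5], [0.5, 0.5, 0.5]]
varied = [[0.9, 0.8, 0.9], [0.05, 0.1, 0.02], [0.6, 0.7, 0.5]]

print(burstiness(steady))
print(burstiness(varied))
```

The steady text has identical perplexity in every sentence, so its burstiness is zero; the varied text swings between very predictable and very surprising sentences, which is the pattern associated with human writing.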

Again, GPTZero is aimed at academic plagiarism. Academic content and marketing content likely have very different randomness “footprints,” so it’s not surprising it didn’t do as well on our content.

Side note on user experience

Several of the tools on this list have a maximum word or character limit. They all have different ways of handling it, though:

- The Huggingface tool is the best. You can put as much text in there as you want and won’t get an error message, i.e., it won’t force you to delete text if your input is too long. The results will clearly tell you, though, that the prediction is “based on the first 510 tokens among the total 982.”

- Writer is okay. It won’t let you submit content with more than 1500 characters, but it does at least tell you how long your content currently is, e.g. “5854/1500 characters.” So, although you’ll have to delete text to get it down under the 1500-character limit, at least you have a frame of reference to know how much you’ll likely have to delete.

- Copyleaks and GPTZero are the worst. If your text is too long, they make you shorten it before it can be scanned, but they don’t tell you how long it actually is. GPTZero, for example, just displays a red error message saying “Please enter fewer than 5000 characters.” So I’m stuck removing paragraphs at a time with no idea of whether I’m just a couple characters above the limit, or if I still have to delete 75% of the article.

This is obviously entirely tangential to the quality of the AI detection itself. When you’re testing lots of different articles in these tools, though, that minor UX annoyance becomes a massive PITA.

Thanks for reading this far. Here’s a bonus dystopian thought experiment as a reward:

These AI detection tools are themselves using AI to determine how content was created. What if future generations of AI detection tools start giving us false results so their AI brethren can sneak through undetected?

🤷‍♀️